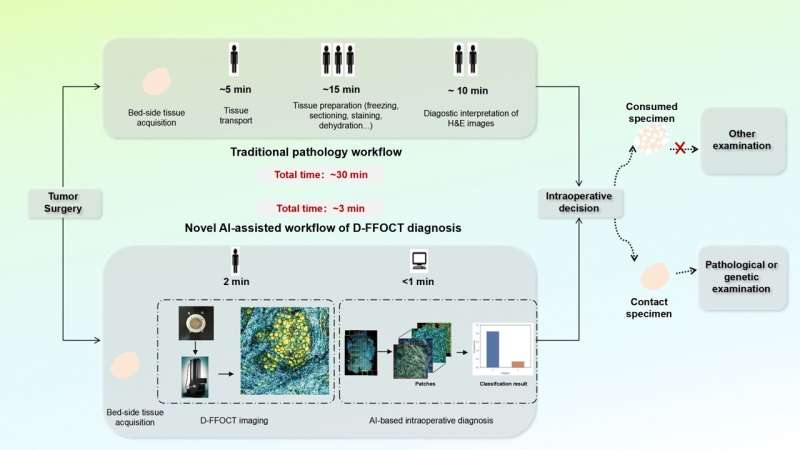

Rapid and accurate intraoperative diagnosis is critical in tumor surgery and can provide precise guidance for surgical decisions. However, traditional intraoperative assessments based on H&E histology, such as frozen sections, are time-, resource-, and labor-intensive, and they consume part of the specimen. Dynamic full-field optical coherence tomography (D-FFOCT) is a high-resolution optical imaging technology that can rapidly generate virtual histology images.

In a recent study published in Science Bulletin, researchers led by Professor Shu Wang (Breast Center, Peking University People's Hospital) introduced an intraoperative diagnostic workflow. The approach uses deep learning algorithms to classify tumors in D-FFOCT images, enabling rapid and accurate automated diagnosis.

The study enrolled a prospective cohort, and a total of 224 breast samples were imaged using D-FFOCT. Image acquisition was non-destructive and required no tissue preparation or staining. The resulting D-FFOCT slides were then cropped into smaller image patches for analysis.
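The article does not state the patch size, stride, or how slide edges were handled, so the following is only a minimal sketch under stated assumptions: square, non-overlapping tiles of a hypothetical 256 × 256 pixels, with partial tiles at the slide border discarded. The helper names `tile_coordinates` and `crop_patches` are illustrative, not the authors' code. Keeping each patch's corner coordinates makes it possible to map patch-level predictions back onto the slide later.

```python
from typing import List, Optional, Tuple

import numpy as np


def tile_coordinates(height: int, width: int, patch: int,
                     stride: Optional[int] = None) -> List[Tuple[int, int]]:
    """Top-left (row, col) corners of every patch that fits fully inside the image.

    Hypothetical helper: stride defaults to the patch size (non-overlapping tiles).
    """
    stride = stride or patch
    return [(r, c)
            for r in range(0, height - patch + 1, stride)
            for c in range(0, width - patch + 1, stride)]


def crop_patches(image: np.ndarray, patch: int = 256,
                 stride: Optional[int] = None) -> Tuple[np.ndarray, List[Tuple[int, int]]]:
    """Cut a 2-D (H, W) or 3-D (H, W, C) image into square patches.

    Returns the stacked patches plus their corner coordinates.
    Raises ValueError (from np.stack) if the image is smaller than one patch.
    """
    height, width = image.shape[:2]
    coords = tile_coordinates(height, width, patch, stride)
    patches = np.stack([image[r:r + patch, c:c + patch] for r, c in coords])
    return patches, coords


# Synthetic 1000 x 1000 RGB "slide" made of random pixels (not real D-FFOCT data).
slide = np.random.default_rng(0).integers(0, 256, size=(1000, 1000, 3), dtype=np.uint8)
patches, coords = crop_patches(slide, patch=256)
print(patches.shape)  # (9, 256, 256, 3): a 3 x 3 grid; the 232-pixel remainder is dropped
```

Dropping edge remainders keeps every patch the same size for the network; padding the border or using an overlapping stride are equally plausible alternatives that the article does not rule out.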

All slides were divided chronologically, by collection time, into a training set (182 slides; 10,357 patches) and an external testing set (42 slides; 3,140 patches). Five-fold cross-validation was used to train and fine-tune the model. To aggregate the patch-level results to the slide level, features were extracted from the patch prediction results and passed to a machine learning model.
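The article says only that slides were split by collection time and that a machine learning model aggregated features extracted from the patch predictions; it does not list those features or name the model. The sketch below assumes a hypothetical `Slide` record (a `collected` date plus per-patch malignancy probabilities) and four simple summary features: mean, maximum, minimum, and the fraction of patches above a 0.5 threshold. These choices are ours for illustration, not the study's.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from statistics import mean
from typing import List, Sequence, Tuple


@dataclass
class Slide:
    slide_id: str
    collected: date            # hypothetical field: specimen collection date
    patch_probs: List[float]   # hypothetical field: per-patch malignancy probabilities


def chronological_split(slides: Sequence[Slide], n_train: int) -> Tuple[List[Slide], List[Slide]]:
    """Earliest n_train slides go to training; the later ones form the test set."""
    ordered = sorted(slides, key=lambda s: s.collected)
    return ordered[:n_train], ordered[n_train:]


def slide_features(patch_probs: Sequence[float], threshold: float = 0.5) -> List[float]:
    """Summarize patch-level predictions as a fixed-length slide-level feature vector."""
    if not patch_probs:
        raise ValueError("a slide needs at least one patch prediction")
    return [
        mean(patch_probs),                                            # average suspicion
        max(patch_probs),                                             # most suspicious patch
        min(patch_probs),                                             # least suspicious patch
        sum(p >= threshold for p in patch_probs) / len(patch_probs),  # fraction flagged positive
    ]


# Made-up example (not study data): 224 slides collected on consecutive days.
slides = [Slide(f"S{i:03d}", date(2022, 1, 1) + timedelta(days=i), [0.2, 0.7])
          for i in range(224)]
train, test = chronological_split(slides, n_train=182)
print(len(train), len(test))  # 182 42
print(slide_features([0.1, 0.9, 0.8, 0.2]))
```

Because every slide yields a vector of the same length regardless of how many patches it has, any standard classifier (logistic regression, for example) could be fit on these vectors to produce the slide-level call; which model the authors actually used is not stated here.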

In the testing set, the model performed well at the patch level in distinguishing cancerous from non-cancerous breast tissue, with an AUC of 0.926 (95% CI: 0.907–0.943). At the slide level, overall diagnostic accuracy in the testing set was 97.62%, with a sensitivity of 96.88% and a specificity of 100%. No statistically significant differences in accuracy were observed across molecular subtypes or histologic types of breast cancer.
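The slide-level figures are simple ratios over the 42 test slides. The article does not give the confusion matrix, but the percentages are consistent with 32 malignant slides (31 detected, 1 missed) and 10 benign slides (all correctly classified): 31/32 ≈ 96.88% sensitivity, 10/10 = 100% specificity, and 41/42 ≈ 97.62% accuracy. Those counts are our inference from the reported percentages, not numbers stated in the article. The sketch below checks that arithmetic and also shows the rank-based definition of AUC (the probability that a randomly chosen positive scores higher than a randomly chosen negative); the confidence interval, typically obtained by bootstrapping, is not shown.

```python
from typing import Dict, Sequence


def slide_metrics(tp: int, fn: int, tn: int, fp: int) -> Dict[str, float]:
    """Accuracy, sensitivity (recall on malignant) and specificity (recall on benign)."""
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def roc_auc(scores_pos: Sequence[float], scores_neg: Sequence[float]) -> float:
    """Rank-based AUC: share of (positive, negative) pairs ordered correctly; ties count 0.5.

    O(len(pos) * len(neg)), which is fine for a few thousand patches.
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(scores_pos) * len(scores_neg))


# Counts inferred from the reported percentages (assumption, not stated in the article).
m = slide_metrics(tp=31, fn=1, tn=10, fp=0)
for name, value in m.items():
    print(f"{name}: {value:.2%}")

# Toy patch scores (made up): three of the four positive/negative pairs are ranked correctly.
print(roc_auc([0.9, 0.8], [0.1, 0.85]))  # 0.75
```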

Visualization heatmaps indicated that the deep learning model learned relevant features of metabolically active cell clusters in D-FFOCT images, consistent with the features experts rely on. This deep-learning-based image analysis could potentially be transferred to other tumor types, because the features the model detected appear to be conserved characteristics in oncologic diagnosis. In the margin simulation experiment, the diagnostic process took approximately 3 min, and the deep learning model achieved an accuracy of 95.24%.
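The article does not say how its heatmaps were generated; they may rely on gradient-based attribution such as Grad-CAM, which the following is not. A simpler, hypothetical way to see where a model flags tissue is to place each patch's predicted probability back at that patch's position, producing a coarse grid that a plotting library can render as a heatmap. The sketch assumes non-overlapping square patches with known top-left corners; `probability_grid` is an illustrative name.

```python
from typing import List, Optional, Sequence, Tuple


def probability_grid(coords: Sequence[Tuple[int, int]], probs: Sequence[float],
                     height: int, width: int, patch: int) -> List[List[Optional[float]]]:
    """Place each patch's probability in its grid cell; cells with no patch stay None."""
    if len(coords) != len(probs):
        raise ValueError("need exactly one probability per patch")
    rows, cols = height // patch, width // patch
    grid: List[List[Optional[float]]] = [[None] * cols for _ in range(rows)]
    for (r, c), p in zip(coords, probs):
        grid[r // patch][c // patch] = p  # valid only because tiles do not overlap
    return grid


# Toy 512 x 512 slide cut into four 256 x 256 patches (made-up probabilities).
coords = [(0, 0), (0, 256), (256, 0), (256, 256)]
probs = [0.05, 0.10, 0.20, 0.95]
for row in probability_grid(coords, probs, height=512, width=512, patch=256):
    print(row)
```

Such a patch-level map shows only where the classifier is confident, not which pixels drove its decision, which is why attribution methods are usually preferred when the goal is to check that a model attends to biologically meaningful structures.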

Based on these results, the study proposed an intraoperative cancer diagnosis workflow that integrates D-FFOCT with the deep learning model. In simulated intraoperative margin diagnosis, the workflow's total processing time of approximately 3 min was about one-tenth of that of conventional intraoperative histology. It also markedly reduced labor costs.

No tissue was destroyed during optical imaging and analysis. In summary, this workflow offers a transparent means of delivering rapid and accurate intraoperative diagnoses and could serve as a tool for guiding intraoperative decisions.

More information:

Shuwei Zhang et al, Potential rapid intraoperative cancer diagnosis using dynamic full-field optical coherence tomography and deep learning: A prospective cohort study in breast cancer patients, Science Bulletin (2024). DOI: 10.1016/j.scib.2024.03.061

Citation: AI algorithms and optical imaging technology: A promising approach to intraoperative cancer diagnosis (2024, May 15) retrieved 15 May 2024 from https://medicalxpress.com/news/2024-05-ai-algorithms-optical-imaging-technology.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.