Surgeons can now create more interactive educational videos for trainees with a web-based system that uses AI image segmentation to break videos into their visual elements, which then serve as the basis for visual questions and feedback. The system, called Surgment, was presented at the 2024 CHI Conference on Human Factors in Computing Systems in Honolulu, Hawai’i.

A paper describing the research is published on the arXiv preprint server.

Videos are a key component in surgical education, but most videos used in training are consumed passively. Trainees simply watch videos of surgical procedures with little opportunity to engage or interact with the content.

“Surgment leverages the latest techniques in AI to add another tool to surgeons’ educational arsenal, providing trainees with more engaging opportunities to learn before entering the operating room,” said Xu Wang, an assistant professor of computer science and engineering at the University of Michigan and co-corresponding author of the study.

Before developing Surgment, the research team interviewed and observed four surgical attendings for a total of eight hours to understand how they use image-based questions while coaching trainees and to identify challenges they face.

They found that surgeons create questions based on images from recorded surgeries that target the identification of anatomy, procedural decision-making and instrument handling skills. Time constraints were a common challenge, as extracting educational images requires meticulous review of the video to pinpoint the best frame based on visual cues.

“Video-based coaching in surgical education can provide efficient and effective feedback to trainees. However, implementing such systems faces challenges like video processing, storage and time for video navigation. In this study, our newly developed system enables expert surgeons to create visual questions and feedback within surgery videos to enhance trainee learning and skills assessment,” said Vitaliy Popov, an assistant professor of learning health sciences at the U-M Medical School.

All participants agreed that video-based quiz questions with immediate feedback could better prepare trainees for the operating room.

Taking the initial interview and observational study into account, the researchers, led by Jingying Wang, a doctoral student in computer science and engineering, developed Surgment with tools that let surgeons quickly select video frames matching their requirements and create visual questions with integrated feedback.

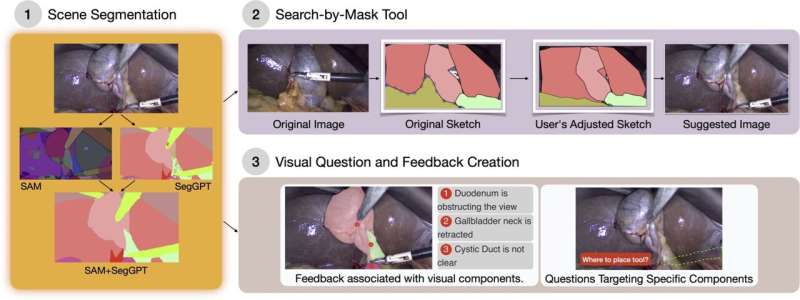

A search-by-mask tool enables video frame selection by breaking video components into editable polygons, allowing users to identify frames of interest and adjust the position, size and shape of elements within the scene. For example, the user may adjust the polygon of “fat” to indicate that they want frames with “less fat occluded to the gallbladder,” and the search tool will fetch images that meet these specifications.
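The article does not say how Surgment scores a frame against the edited polygons. As a rough illustration only, the sketch below assumes each frame already has one binary mask per labeled component and ranks frames by mean intersection-over-union (IoU) with the query masks. The names `iou`, `search_by_mask`, the frame IDs and the tiny 2x3 masks are all hypothetical and are not taken from the paper.

```python
from typing import Dict, List, Tuple

# Binary mask as nested lists: 1 where the labeled component is present.
Mask = List[List[int]]


def iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two equally sized binary masks."""
    inter = 0
    union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += 1 if (pa and pb) else 0
            union += 1 if (pa or pb) else 0
    # Two empty masks are treated as a perfect match.
    return inter / union if union else 1.0


def search_by_mask(query: Dict[str, Mask],
                   frames: Dict[str, Dict[str, Mask]],
                   top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank frames by mean IoU over the labels present in the query."""
    if not query:
        return []
    scored = []
    for frame_id, masks in frames.items():
        per_label = [
            iou(q_mask, masks[label]) if label in masks else 0.0
            for label, q_mask in query.items()
        ]
        scored.append((frame_id, sum(per_label) / len(per_label)))
    # Highest similarity first.
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Hypothetical query: a small patch of fat and a mostly visible gallbladder.
query = {
    "fat": [[1, 0, 0], [0, 0, 0]],
    "gallbladder": [[0, 1, 1], [0, 1, 1]],
}

# Hypothetical per-frame segmentation results.
frames = {
    "frame_a": {
        "fat": [[1, 1, 0], [1, 1, 0]],
        "gallbladder": [[0, 0, 1], [0, 0, 1]],
    },
    "frame_b": {
        "fat": [[1, 0, 0], [0, 0, 0]],
        "gallbladder": [[0, 1, 1], [0, 1, 0]],
    },
}

results = search_by_mask(query, frames)
for frame_id, score in results:
    print(frame_id, round(score, 3))
```

In this toy data, the frame with less fat covering the gallbladder ranks first. A real system would work on full-resolution masks and might weight labels or spatial relationships differently.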

Once frames are retrieved, a quiz-maker tool allows surgeons to create interactive questions targeting operative procedure, anatomy or surgical tool handling, using multiple-choice, extract-a-component or draw-a-path prompts, while offering visual feedback.

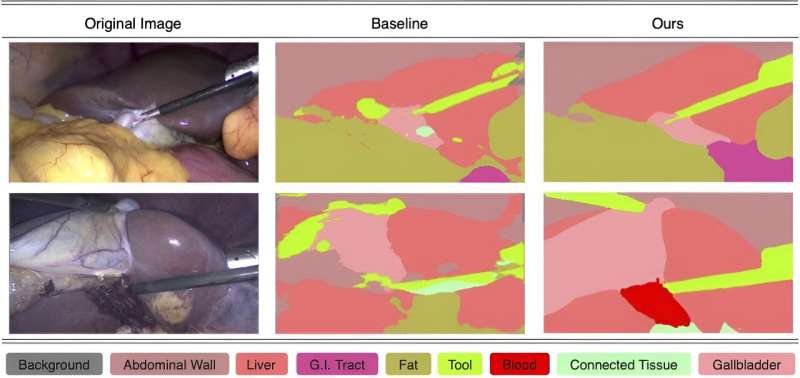

Surgment is powered by a pipeline based on few-shot learning, a machine learning approach in which a model learns to generalize from a small dataset. The pipeline combines two models known as SAM and SegGPT. The researchers combined the two because they found that doing so helped compensate for the errors each model would make on its own.

The pipeline achieved 92% accuracy when tested on two public surgical datasets, surpassing the leading segmentation models, UNet and UNet++, which scored 73% and 76% accuracy, respectively.

The search-by-mask tool retrieved images that met user requirements with 88% accuracy, far surpassing the 31.1% accuracy of the only other tool that offers this capability.

The research team performed an evaluation study with 11 surgeons. All of them judged the tool to have high educational value, and the majority reported that it saved time because direct searching eliminated the need to watch the videos.

“All participants spoke highly of Surgment’s capability in easily offering visual feedback on anatomies,” said Jingying Wang.

One study participant said, “The visual feedback gives additional repetitions of recognizing anatomical structures. There’s the textbook diagram for what anatomy is supposed to look like, but in every single human being it looks just slightly different.”

“It’s rewarding to see that surgeons are able to create questions they believe to be of high educational value with Surgment. Many surgeons said that the questions they created resembled those they had asked or had been asked during an operation,” said Xu Wang.

When discussing next steps to improve Surgment, some surgeons noted that the tool has a learning curve and that finer-grained segmentation of the images would help in offering highly targeted feedback. They also emphasized that Surgment can improve skills that can be learned before entering the operating room, but that 3D perception and decision-making need to be learned in person.

“This work demonstrates the power of AI tools to enhance educational resources both in medicine and beyond,” said Xu Wang.

More information:

Jingying Wang et al, Surgment: Segmentation-enabled Semantic Search and Creation of Visual Question and Feedback to Support Video-Based Surgery Learning, arXiv (2024). DOI: 10.48550/arxiv.2402.17903

Citation: New tool can help surgeons quickly search videos and create interactive feedback (2024, May 15), retrieved 15 May 2024 from https://medicalxpress.com/news/2024-05-tool-surgeons-quickly-videos-interactive.html
